Securing your Kubernetes workloads with Sigstore

Date: 2023-07-20

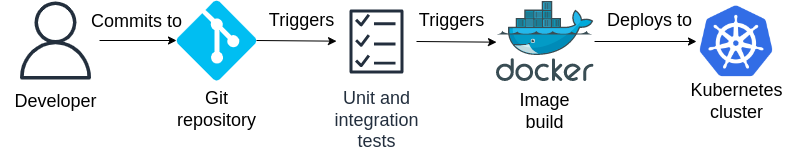

Consider the typical CI/CD pipeline shown above. What issues, if any, does this DevOps workflow have?

The main issue is that security is not integrated into the pipeline as a first-class citizen. Let’s assume the best case, where the Kubernetes cluster hosting the production workloads has been reasonably secured, if only as an afterthought. A malicious actor who fails to gain access to the nodes themselves might instead target the Pods running the workloads as a starting point. However, this attack vector is not very effective - since Pods are ephemeral and constantly being recreated, the attacker would have a hard time maintaining access to the infected workload, let alone moving through the cluster.

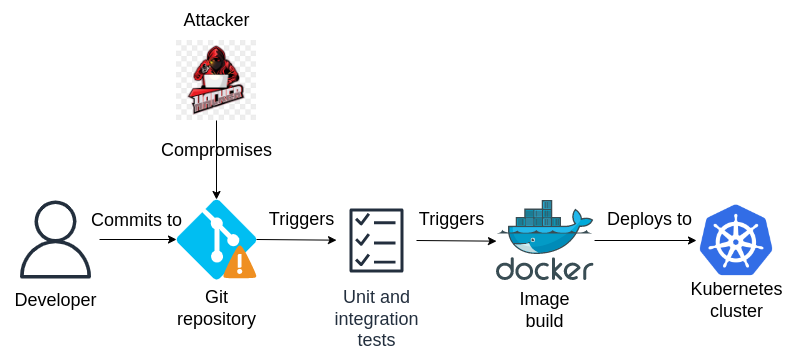

Instead, an attacker might compromise the production workload(s) straight from the source, either by injecting vulnerabilities and/or malware into the source code itself (e.g. by first gaining access to a developer’s workstation or laptop) or by infecting the build environment where images are generated - this is commonly known as a software supply chain attack. The compromised application image then makes its way to production as a Kubernetes Deployment. Since the image itself is already infected, every Pod created and re-created from that Deployment is infected too, which makes it far easier for the attacker to maintain access to the workload and subsequently work their way around the cluster.

To prevent software supply chain attacks like the one shown above, security measures should be proactively integrated into the pipeline as a first-class citizen and DevOps professionals should integrate security practices into their day-to-day duties - this practice of integrating security into DevOps is also known as DevSecOps.

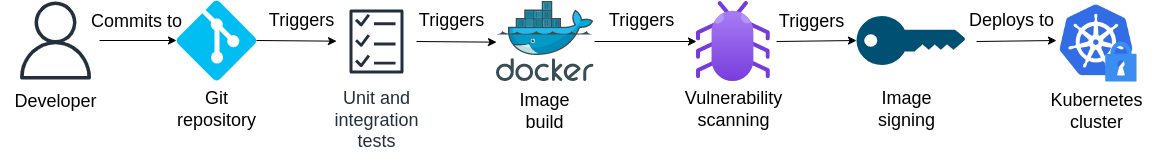

For example, consider the DevSecOps pipeline above, with automated vulnerability scanning and image signing built in. The idea is that the image is signed and deployed to the cluster automatically only if vulnerability scanning reports no malware and no high-severity vulnerabilities; otherwise the image is rejected. The cluster also enforces a policy so that only workloads whose images carry the appropriate signature(s) can be deployed, which prevents attackers from circumventing the pipeline and deploying unsigned (potentially infected) images directly to the cluster.
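The "sign only if the scan passes" gate can be sketched in a few lines of shell. This is only an illustration of the control flow, not a real pipeline: `gate_and_sign`, `SCAN_CMD` and `SIGN_CMD` are hypothetical names, and each variable is assumed to hold a single executable (for example a small wrapper script around your scanner or signer).

```shell
# Hypothetical pipeline gate: sign an image only if the scanner passes.
# SCAN_CMD and SIGN_CMD are placeholders (assumptions), not real tool names.
# Each must be a single executable that takes the image reference as its argument.
gate_and_sign() {
  local image="$1"
  # A non-zero exit status from the scanner means "reject the image".
  if ! "${SCAN_CMD:?set SCAN_CMD}" "$image"; then
    echo "rejected: ${image}" >&2
    return 1
  fi
  # Only reached when the scan succeeded.
  "${SIGN_CMD:?set SIGN_CMD}" "$image" || return 1
  echo "signed: ${image}"
}
```

A CI job would call `gate_and_sign` once per built image and stop the deployment stage when it returns a non-zero status.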

In the lab that follows, we will introduce an open source project designed for DevSecOps workflows and dedicated to improving software supply chain security - Sigstore.

Lab: Sign and verify your workloads with Sigstore

Prerequisites

A basic understanding of Kubernetes is assumed. If you are new to Kubernetes, consider enrolling in LFS158x: Introduction to Kubernetes, a self-paced online course offered by The Linux Foundation on edX at no cost.

Setting up your environment

You’ll need a Linux environment with at least 2 vCPUs and 4 GB of RAM. The reference distribution is Ubuntu 22.04 LTS, though the lab should work on most other Linux distributions with little to no modification.

We’ll set up the following tools: Docker, Cosign, kind, kubectl and Helm.

Installing Docker

Docker (hopefully) needs no introduction - simply install it from the system repositories and add yourself to the docker group:

sudo apt update && sudo apt install -y docker.io

sudo usermod -aG docker "${USER}"

Log out and back in for group membership to take effect.

Installing Cosign

Cosign is a command-line tool for signing and verifying container images. It is part of the Sigstore project, which is dedicated to improving software supply chain security.

Let’s first create a user-specific directory for storing binaries and add it to our PATH so subsequent installation of software will not require sudo:

mkdir -p "$HOME/.local/bin/"

echo "export PATH=\"\$HOME/.local/bin:\$PATH\"" >> "$HOME/.bashrc"

source "$HOME/.bashrc"

Now fetch the cosign binary from upstream and make it executable - we’ll be using version 2.1.1:

wget -qO "$HOME/.local/bin/cosign" https://github.com/sigstore/cosign/releases/download/v2.1.1/cosign-linux-amd64

chmod +x "$HOME/.local/bin/cosign"
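Since this lab is all about supply chain integrity, you may want to check a downloaded binary against a checksum before trusting it. Below is a minimal sketch, assuming you have obtained the expected SHA-256 value from a source you trust (such as the project's release page); `verify_sha256` is a hypothetical helper, not part of any of the tools above.

```shell
# verify_sha256 FILE EXPECTED_HEX
# Hypothetical helper: succeeds only if the SHA-256 of FILE equals EXPECTED_HEX.
verify_sha256() {
  local file="$1" expected="$2" actual
  # sha256sum prints "<hex>  <filename>"; keep only the hex digest.
  actual="$(sha256sum "$file" | cut -d' ' -f1)"
  if [ "$actual" = "$expected" ]; then
    echo "OK: ${file}"
  else
    echo "MISMATCH: ${file} (got ${actual})" >&2
    return 1
  fi
}
```

For example, `verify_sha256 "$HOME/.local/bin/cosign" "<expected hex>"` would print an `OK:` line only when the two values match.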

Installing kind

kind is a conformant Kubernetes distribution which runs entirely in Docker and is great for development, testing and educational purposes.

Let’s fetch kind from upstream and make it executable - we’ll be using version 0.20.0:

wget -qO "$HOME/.local/bin/kind" https://github.com/kubernetes-sigs/kind/releases/download/v0.20.0/kind-linux-amd64

chmod +x "$HOME/.local/bin/kind"

Installing kubectl

kubectl is the official command-line tool for interacting with Kubernetes clusters.

Let’s fetch kubectl from upstream and make it executable - we’ll be using version 1.27.3:

wget -qO "$HOME/.local/bin/kubectl" https://dl.k8s.io/release/v1.27.3/bin/linux/amd64/kubectl

chmod +x "$HOME/.local/bin/kubectl"

You might also find it useful to enable Bash completion for kubectl, which can save you quite a bit of typing:

echo "source <(kubectl completion bash)" >> "$HOME/.bashrc"

source "$HOME/.bashrc"

Take care to keep the kubectl (client) version close to the Kubernetes cluster (server) version - kubectl is supported within one minor version (older or newer) of the API server. We chose v1.27.3 for our kubectl client since kind v0.20.0 creates Kubernetes v1.27.3 clusters by default.
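The version check boils down to comparing the minor components of two version strings. Here is a small sketch of that arithmetic; `minor_of` and `minor_skew` are hypothetical helpers, and the sketch assumes both versions share the same major version and look like `v1.27.3`.

```shell
# minor_of VERSION: print the minor component of a version such as v1.27.3.
minor_of() {
  local v="${1#v}"   # drop an optional leading "v"
  v="${v#*.}"        # drop the major component and its dot
  echo "${v%%.*}"    # keep everything before the next dot
}

# minor_skew CLIENT SERVER: print the absolute difference in minor versions.
# Assumes both versions have the same major version.
minor_skew() {
  local c s d
  c="$(minor_of "$1")"
  s="$(minor_of "$2")"
  d=$(( c - s ))
  echo "${d#-}"      # strip the sign to get the absolute value
}
```

With our chosen versions, `minor_skew v1.27.3 v1.27.3` prints `0`, so the client and server are well within the supported range.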

Installing Helm

Helm is the official package manager for Kubernetes - it is effectively “orchestration for orchestration”.

Let’s fetch the archive from upstream and move helm to our PATH - we’ll be using version 3.12.2:

wget https://get.helm.sh/helm-v3.12.2-linux-amd64.tar.gz

tar xvf helm-v3.12.2-linux-amd64.tar.gz

mv linux-amd64/helm "$HOME/.local/bin/helm"

rm -rf helm-v3.12.2-linux-amd64.tar.gz linux-amd64/

Verifying everything is installed correctly

Run the following commands to check the version of each tool we just installed:

docker --version

cosign version

kind --version

kubectl version --client

helm version

Sample output:

Docker version 20.10.21, build 20.10.21-0ubuntu1~22.04.3

  ______   ______        _______. __    _______ .__   __.
 /      | /  __  \      /       ||  |  /  _____||  \ |  |
|  ,----'|  |  |  |    |   (----`|  | |  |  __  |   \|  |
|  |     |  |  |  |     \   \    |  | |  | |_ | |  . `  |
|  `----.|  `--'  | .----)   |   |  | |  |__| | |  |\   |
 \______| \______/  |_______/    |__|  \______| |__| \__|

cosign: A tool for Container Signing, Verification and Storage in an OCI registry.

GitVersion: v2.1.1

GitCommit: baf97ccb4926ed09c8f204b537dc0ee77b60d043

GitTreeState: clean

BuildDate: 2023-06-27T06:57:11Z

GoVersion: go1.20.5

Compiler: gc

Platform: linux/amd64

kind version 0.20.0

WARNING: This version information is deprecated and will be replaced with the output from kubectl version --short. Use --output=yaml|json to get the full version.

Client Version: version.Info{Major:"1", Minor:"27", GitVersion:"v1.27.3", GitCommit:"25b4e43193bcda6c7328a6d147b1fb73a33f1598", GitTreeState:"clean", BuildDate:"2023-06-14T09:53:42Z", GoVersion:"go1.20.5", Compiler:"gc", Platform:"linux/amd64"}

Kustomize Version: v5.0.1

version.BuildInfo{Version:"v3.12.2", GitCommit:"1e210a2c8cc5117d1055bfaa5d40f51bbc2e345e", GitTreeState:"clean", GoVersion:"go1.20.5"}

You can safely ignore any warnings printed to the console. As long as there are no errors, you should be good to go :-)

Sign and verify your first image with Cosign

Before we sign and verify our first image, we’ll need to build one and push it to Docker Hub (or another registry of your choice).

Build and push our app to Docker Hub

Let’s create a project folder hello-cosign/ for building our image:

mkdir -p hello-cosign/

pushd hello-cosign/

We’ll base our “app” on Apache, a popular web server. Run the command below to fill in our Dockerfile:

cat > Dockerfile << EOF

FROM httpd:2.4

COPY ./public-html/ /usr/local/apache2/htdocs/

EOF

Now create a public-html/ folder and write our “Hello Cosign” homepage which is what will make our app “unique”:

mkdir -p public-html/

cat > public-html/index.html << EOF

<!DOCTYPE HTML>

<html>

<head>

<meta charset="utf-8" />

<title>Hello Cosign!</title>

</head>

<body>

<h1>Hello Cosign!</h1>

</body>

</html>

EOF

Before we proceed, export your Docker Hub username, replacing johndoe below with your actual username:

echo "export DOCKERHUB_USERNAME=\"johndoe\"" >> "$HOME/.bashrc"

source "$HOME/.bashrc"

Now build our image and name it hello-cosign, giving it a tag of 0.0.1:

export APP_NAME="hello-cosign"

export VERSION="0.0.1"

docker build -t "${DOCKERHUB_USERNAME}/${APP_NAME}:${VERSION}" .

To confirm our app is working properly, let’s start a container with our app and check the output:

docker run --rm -d -p 8080:80 --name "${APP_NAME}" "${DOCKERHUB_USERNAME}/${APP_NAME}:${VERSION}"

curl localhost:8080

Sample output:

c959f8bf92921b290785611fbc9f06a1fb912beaedd0fae2adb2c50134658a18

<!DOCTYPE HTML>

<html>

<head>

<meta charset="utf-8" />

<title>Hello Cosign!</title>

</head>

<body>

<h1>Hello Cosign!</h1>

</body>

</html>

Stop the container once our app is confirmed operational:

docker stop "${APP_NAME}"

Now let’s log in to Docker Hub and push our image:

docker login -u "${DOCKERHUB_USERNAME}"

docker push "${DOCKERHUB_USERNAME}/${APP_NAME}:${VERSION}"

Time to leave our app directory:

popd

Hello Cosign!

From the README in the official repository sigstore/cosign:

Cosign supports:

- “Keyless signing” with the Sigstore public good Fulcio certificate authority and Rekor transparency log (default)

- Hardware and KMS signing

- Signing with a cosign generated encrypted private/public keypair

- Container Signing, Verification and Storage in an OCI registry.

- Bring-your-own PKI

For simplicity, we’ll go with a cosign generated encrypted private/public keypair in this demo, though one might prefer keyless signing or signing with a public cloud KMS managed key pair in a production environment.

Let’s generate our key pair:

cosign generate-key-pair

Press Enter twice to set an empty password for the private key. You should also see the output below which can be verified with ls:

Private key written to cosign.key

Public key written to cosign.pub

As usual, we sign our container image with the private key and verify it later with the public key. But before that, we need to look up the SHA256 digest of our image and refer to it during the signing process, to ensure that we are indeed signing the correct artifact!

export APP_DIGEST="$(docker images --digests --format '{{.Digest}}' "${DOCKERHUB_USERNAME}/${APP_NAME}:${VERSION}" | cut -d':' -f2)"
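A digest reference has the shape `repository@sha256:<hex>`, and the signing step only needs the hex part. The sketch below shows that extraction with plain shell parameter expansion; `digest_hex` is a hypothetical helper introduced for illustration.

```shell
# digest_hex REF: print the hex part of a reference like repo@sha256:<hex>.
# Hypothetical helper; fails if REF is not a sha256 digest reference.
digest_hex() {
  local ref="$1"
  case "$ref" in
    *@sha256:*)
      # Remove everything up to and including "@sha256:".
      echo "${ref##*@sha256:}"
      ;;
    *)
      echo "not a digest reference: ${ref}" >&2
      return 1
      ;;
  esac
}
```

One way to feed it is `docker inspect --format '{{index .RepoDigests 0}}' "${DOCKERHUB_USERNAME}/${APP_NAME}:${VERSION}"`, which prints such a reference once the image has been pushed to (or pulled from) a registry.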

Now sign with cosign sign, specifying our private key cosign.key and referring to our image by SHA256 digest instead of version tag:

cosign sign --key cosign.key "${DOCKERHUB_USERNAME}/${APP_NAME}@sha256:${APP_DIGEST}"

Sample output:

Enter password for private key:

The sigstore service, hosted by sigstore a Series of LF Projects, LLC, is provided pursuant to the Hosted Project Tools Terms of Use, available at https://lfprojects.org/policies/hosted-project-tools-terms-of-use/.

Note that if your submission includes personal data associated with this signed artifact, it will be part of an immutable record.

This may include the email address associated with the account with which you authenticate your contractual Agreement.

This information will be used for signing this artifact and will be stored in public transparency logs and cannot be removed later, and is subject to the Immutable Record notice at https://lfprojects.org/policies/hosted-project-tools-immutable-records/.

By typing 'y', you attest that (1) you are not submitting the personal data of any other person; and (2) you understand and agree to the statement and the Agreement terms at the URLs listed above.

Are you sure you would like to continue? [y/N] y

tlog entry created with index: 28001047

Pushing signature to: index.docker.io/donaldsebleung/hello-cosign
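In a CI job there is nobody to type `y` at the confirmation prompt. The sketch below wraps the same signing command with cosign's `--yes` flag, which skips the prompt; `sign_by_digest` is a hypothetical wrapper, and the `COSIGN` variable exists only so the function can be dry-run with `COSIGN=echo`. For a password-protected key, cosign can read the password from the `COSIGN_PASSWORD` environment variable instead of prompting.

```shell
# Overridable so the function can be dry-run with COSIGN=echo.
COSIGN="${COSIGN:-cosign}"

# sign_by_digest KEY REPO HEX
# Hypothetical wrapper: sign REPO@sha256:HEX without the interactive prompt.
sign_by_digest() {
  local key="$1" repo="$2" hex="$3"
  "$COSIGN" sign --yes --key "$key" "${repo}@sha256:${hex}"
}
```

In this lab that would be `sign_by_digest cosign.key "${DOCKERHUB_USERNAME}/${APP_NAME}" "${APP_DIGEST}"`.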

Now log out of Docker Hub:

docker logout

To verify, use cosign verify with the public key cosign.pub instead. Feel free to refer to the image by version tag here, since a third party verifying your image might not know or care about the SHA256 digest:

cosign verify --key cosign.pub "${DOCKERHUB_USERNAME}/${APP_NAME}:${VERSION}"

Sample output:

Verification for index.docker.io/donaldsebleung/hello-cosign:0.0.1 --

The following checks were performed on each of these signatures:

- The cosign claims were validated

- Existence of the claims in the transparency log was verified offline

- The signatures were verified against the specified public key

[{"critical":{"identity":{"docker-reference":"index.docker.io/donaldsebleung/hello-cosign"},"image":{"docker-manifest-digest":"sha256:507f40e3f0520ef9cae6d05bb3663c298c06d3c968d651536ede5ea11bd1c71a"},"type":"cosign container image signature"},"optional":{"Bundle":{"SignedEntryTimestamp":"MEUCIQDbpsPaKQIekQUAMntuXDFJXFmw2MEgMJ0/PPVcd/qKPwIgFn7qhn3yGjybDKX01rf+1O85ohB7ISVuNbKNdJbGfrg=","Payload":{"body":"eyJhcGlWZXJzaW9uIjoiMC4wLjEiLCJraW5kIjoiaGFzaGVkcmVrb3JkIiwic3BlYyI6eyJkYXRhIjp7Imhhc2giOnsiYWxnb3JpdGhtIjoic2hhMjU2IiwidmFsdWUiOiJlYWExM2I2Y2ZlNjQwZjg1Y2M3Zjg0Njc1ZmM0YmNhNmQwOWM4MTAxYjIyMmRjYjQzZDZlNzcxNDdmYWJkNDcyIn19LCJzaWduYXR1cmUiOnsiY29udGVudCI6Ik1FVUNJQzNPSHl5REtOTTAwY0l5bFg5MGwzWU1CbmQ3OUdRZWtrOVhNMGszVGh4SUFpRUFuMDJRNmxhQ25mOGsvZmNDd3ErVVROMXF2dmczdm5CbERhS1lIai96eldZPSIsInB1YmxpY0tleSI6eyJjb250ZW50IjoiTFMwdExTMUNSVWRKVGlCUVZVSk1TVU1nUzBWWkxTMHRMUzBLVFVacmQwVjNXVWhMYjFwSmVtb3dRMEZSV1VsTGIxcEplbW93UkVGUlkwUlJaMEZGU2xaV09GWldha3AwTkZKU1dFbHpOa1J1U0dRNFYzSkZaekE0WndwS1IzRnpMMmhPUVV0eFkyVmtOMHN3VEZnNVdFaGlTa05hTkc5NE56UTBORE5sTVhVMVFqSkxiek5DYVZwWGIwUjJVbEJTVDJoR1EwNW5QVDBLTFMwdExTMUZUa1FnVUZWQ1RFbERJRXRGV1MwdExTMHRDZz09In19fX0=","integratedTime":1689771460,"logIndex":28001047,"logID":"c0d23d6ad406973f9559f3ba2d1ca01f84147d8ffc5b8445c224f98b9591801d"}}}}]
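The JSON payload records exactly which image digest the signature covers, in the `docker-manifest-digest` field. As a rough sketch, the filter below pulls that value out with `grep` and `sed` so it can be compared against the digest you expect; `signed_digests` is a hypothetical helper, and the sketch assumes the JSON arrives on standard input in the compact form shown above.

```shell
# signed_digests: read cosign verify JSON on stdin and print each signed
# digest (sha256:<hex>), one per line. Hypothetical helper for illustration.
signed_digests() {
  grep -o '"docker-manifest-digest":"sha256:[0-9a-f]*"' \
    | sed 's/.*"\(sha256:[0-9a-f]*\)"$/\1/'
}
```

Assuming the JSON is written to standard output, `cosign verify --key cosign.pub "${DOCKERHUB_USERNAME}/${APP_NAME}:${VERSION}" 2>/dev/null | signed_digests` should print the same digest that was signed earlier.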

Enforce workload integrity with ClusterImagePolicy

Being able to manually sign and verify images is good and all, but what we really want is for our Kubernetes cluster to automatically verify image signatures at deployment time and refuse to run the (potentially compromised) workload in case a valid signature cannot be found. This is where Sigstore’s policy-controller and ClusterImagePolicy come in - though first we need a Kubernetes cluster ;-)

Let’s get one up and running in no time using kind:

kind create cluster --name hello-sigstore

Before we get started, let’s create a few namespaces:

- dev: “Development” namespace

- prod: “Production” namespace

- cosign-system: where our policy-controller will be installed

kubectl create ns dev

kubectl create ns prod

kubectl create ns cosign-system

Now let’s install policy-controller using Helm. Take a look at the project details on Artifact Hub, then run the following commands to add Sigstore’s Helm repository and install sigstore/policy-controller into our cosign-system namespace:

helm repo add sigstore https://sigstore.github.io/helm-charts

helm repo update

helm -n cosign-system install my-policy-controller sigstore/policy-controller --version 0.6.0
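The admission webhook only starts enforcing policies once its Deployment is up, so it can help to wait for that before moving on. Below is a minimal sketch using `kubectl wait`; `wait_for_deployments` is a hypothetical helper, the 120s default timeout is an arbitrary choice, and the `KUBECTL` variable is there only so the function can be dry-run with `KUBECTL=echo`.

```shell
# Overridable so the function can be dry-run with KUBECTL=echo.
KUBECTL="${KUBECTL:-kubectl}"

# wait_for_deployments NAMESPACE [TIMEOUT]
# Hypothetical helper: block until every Deployment in NAMESPACE is Available.
wait_for_deployments() {
  local ns="$1" timeout="${2:-120s}"
  "$KUBECTL" wait --namespace "$ns" \
    --for=condition=Available deployment --all --timeout="$timeout"
}
```

Here you would run `wait_for_deployments cosign-system` right after the Helm installation.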

Now consider the policy described below which might resemble a real-world scenario:

- To ensure the integrity of our production workloads, we will require valid signatures on all images in the prod namespace

- However, the dev namespace is really for our developers to test stuff out quickly and won’t be running production workloads, so it might not make sense to enforce image signature and verification there

To enforce the policy described above, we’ll need to do two things:

- Define our ClusterImagePolicy to require a valid signature from our key pair for all images

- Using the label policy.sigstore.dev/include=true, enforce the policy in our prod namespace and leave our dev namespace intact

Let’s write our ClusterImagePolicy and apply it to the cluster:

cat > my-image-policy.yaml << EOF
apiVersion: policy.sigstore.dev/v1beta1
kind: ClusterImagePolicy
metadata:
  name: my-image-policy
spec:
  images:
  - glob: "**"
  authorities:
  - key:
      hashAlgorithm: sha256
      data: |
$(cat cosign.pub | sed 's/^/        /')
EOF

kubectl apply -f my-image-policy.yaml

Now apply the label policy.sigstore.dev/include=true to the prod namespace for the policy to take effect there:

kubectl label ns prod policy.sigstore.dev/include=true
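Because enforcement is opt-in per namespace, switching it on and off is just a matter of adding or removing that label. The sketch below wraps the three operations you are likely to need; the function names are hypothetical, and `KUBECTL` is overridable only so the commands can be dry-run with `KUBECTL=echo`.

```shell
# Overridable so the functions can be dry-run with KUBECTL=echo.
KUBECTL="${KUBECTL:-kubectl}"

# Opt a namespace in to signature enforcement.
enforce_signing() {
  "$KUBECTL" label ns "$1" policy.sigstore.dev/include=true --overwrite
}

# Opt a namespace out again (a trailing "-" removes the label).
exempt_signing() {
  "$KUBECTL" label ns "$1" policy.sigstore.dev/include-
}

# List the namespaces where the policy currently applies.
list_enforced() {
  "$KUBECTL" get ns -l policy.sigstore.dev/include=true
}
```

After the step above, `list_enforced` should show prod but not dev.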

Deploy a Pod with curl installed so we can conduct some tests in a moment:

kubectl run curlpod --image=curlimages/curl -- sleep infinity

Let’s also generate some common Deployment templates to be applied to both the dev and prod namespaces:

kubectl create deploy nginx \
    --image=nginx \
    --replicas=2 \
    --port=80 \
    --dry-run=client \
    -o yaml > nginx.yaml

kubectl create deploy "${APP_NAME}" \
    --image="${DOCKERHUB_USERNAME}/${APP_NAME}:${VERSION}" \
    --replicas=2 \
    --port=80 \
    --dry-run=client \
    -o yaml > "${APP_NAME}.yaml"

Now compare the behavior of the dev and prod namespaces. We expect the dev namespace to accept any workload (the default) and the prod namespace to accept only signed workloads.

Verify we can run unsigned workloads in the dev namespace by deploying NGINX:

kubectl -n dev apply -f nginx.yaml

Let’s expose the deployment as well, and verify we can reach the service using curl:

kubectl -n dev expose deploy nginx

kubectl exec curlpod -- curl -s nginx.dev

Sample output:

service/nginx exposed

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

For sanity, verify we can also run signed workloads in the dev namespace by deploying our app:

kubectl -n dev apply -f "${APP_NAME}.yaml"

kubectl -n dev expose deploy "${APP_NAME}"

kubectl exec curlpod -- curl -s "${APP_NAME}.dev"

Sample output:

deployment.apps/hello-cosign created

service/hello-cosign exposed

<!DOCTYPE HTML>

<html>

<head>

<meta charset="utf-8" />

<title>Hello Cosign!</title>

</head>

<body>

<h1>Hello Cosign!</h1>

</body>

</html>

Let’s delete the deployments and associated services to save on resources:

kubectl -n dev delete svc "${APP_NAME}"

kubectl -n dev delete deploy "${APP_NAME}"

kubectl -n dev delete svc nginx

kubectl -n dev delete deploy nginx

Now let’s repeat the experiments on our prod namespace.

Try to deploy our unsigned NGINX workload:

kubectl -n prod apply -f nginx.yaml

You should see the following error:

Error from server (BadRequest): error when creating "nginx.yaml": admission webhook "policy.sigstore.dev" denied the request: validation failed: failed policy: my-image-policy: spec.template.spec.containers[0].image

index.docker.io/library/nginx@sha256:08bc36ad52474e528cc1ea3426b5e3f4bad8a130318e3140d6cfe29c8892c7ef signature key validation failed for authority authority-0 for index.docker.io/library/nginx@sha256:08bc36ad52474e528cc1ea3426b5e3f4bad8a130318e3140d6cfe29c8892c7ef: no signatures found for image

This indicates our ClusterImagePolicy is in effect and rejecting Deployments using unsigned images. You can confirm that the Deployment is indeed not created by running the command kubectl -n prod get deploy.

No resources found in prod namespace.
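If you only want to know whether a manifest would be admitted, without creating anything, a server-side dry run is one option. This is a sketch under the assumption that the policy-controller webhook is consulted for dry-run requests; `would_admit` is a hypothetical helper, and `KUBECTL` is overridable only for dry-running the function itself.

```shell
# Overridable so the function can be exercised without a cluster.
KUBECTL="${KUBECTL:-kubectl}"

# would_admit NAMESPACE MANIFEST
# Hypothetical helper: ask the API server to validate MANIFEST in NAMESPACE
# without persisting it. Note that a failure can also mean something other
# than a policy denial (for example an invalid manifest or no cluster access).
would_admit() {
  local ns="$1" manifest="$2"
  if "$KUBECTL" apply --namespace "$ns" --dry-run=server -f "$manifest" >/dev/null 2>&1; then
    echo "admitted: ${manifest} in ${ns}"
  else
    echo "denied: ${manifest} in ${ns}"
    return 1
  fi
}
```

Under that assumption, `would_admit prod nginx.yaml` would report a denial, while `would_admit dev nginx.yaml` would report that the manifest is admitted.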

Now confirm that we can deploy our signed app:

kubectl -n prod apply -f "${APP_NAME}.yaml"

Expose our app and curl it to confirm it is working as expected:

kubectl -n prod expose deploy "${APP_NAME}"

kubectl exec curlpod -- curl -s "${APP_NAME}.prod"

Sample output:

service/hello-cosign exposed

<!DOCTYPE HTML>

<html>

<head>

<meta charset="utf-8" />

<title>Hello Cosign!</title>

</head>

<body>

<h1>Hello Cosign!</h1>

</body>

</html>

Concluding remarks

We’ve seen how to sign and verify our own images using cosign, and how to configure a Kubernetes cluster to enforce workload signing on the namespace level using policy-controller, both under the Sigstore project dedicated to improving software supply chain security.

I hope you enjoyed this lab and stay tuned for more content :-)
