Evaluating and securing your Kubernetes infrastructure with kube-bench

Date: 2023-08-25

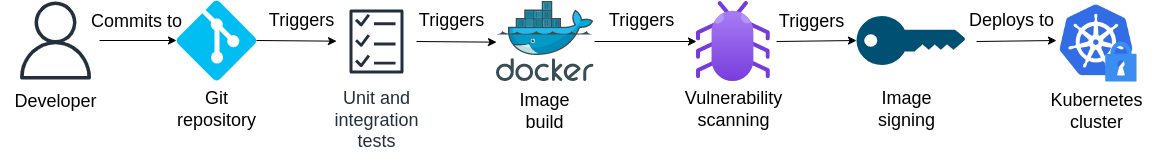

In the past few articles, we saw how to construct a complete DevOps pipeline with GitHub Actions and integrate security-oriented tools such as Grype, Sigstore Cosign and policy-controller into our pipeline to implement an end-to-end DevSecOps workflow providing a comprehensive level of protection for our applications:

- Implementing continuous delivery pipelines with GitHub Actions

- Scanning and remediating vulnerabilities with Grype

- Securing your Kubernetes workloads with Sigstore

However, no matter how well our applications are secured, the security of our entire IT environment ultimately depends on the security of our infrastructure. Therefore, in the lab to follow, we will shift our focus away from Kubernetes workloads and instead explore how we can evaluate and improve upon the security of our Kubernetes clusters with kube-bench, the industry-leading Kubernetes benchmarking solution developed by Aqua.

Lab: Evaluating and improving upon the security of a two-node Kubernetes cluster

Prerequisites

Familiarity with Kubernetes cluster administration is assumed. If you are new to it, consider enrolling in LFS258: Kubernetes Fundamentals, the online training course offered by The Linux Foundation, which is also the official training course for the CKA certification exam offered by the CNCF.

Setting up your environment

It is assumed you already have a public cloud account such as an AWS account or a laptop / workstation capable of hosting at least 2 Linux nodes each with 2 vCPUs and 8G of RAM, one of which will become the master node and the other the worker node. You may follow the lab with a bare-metal setup as well, but note that we’ll re-create our entire cluster halfway through which may take additional time on bare metal.

The reference distribution is Ubuntu 22.04 LTS, against which the instructions in this lab have been tested. If you’re looking for a challenge, feel free to follow the lab with a different distribution, but beware that some of the instructions may require non-trivial modification.

For the purposes of this lab, we’ll refer to our master node as master0 and worker node as worker0.

Evaluating a typical kubeadm cluster against the CIS Kubernetes benchmarks

Let’s set up a kubeadm cluster following the typical process and test it with kube-bench, which will run automated tests to evaluate our cluster against the CIS Kubernetes benchmarks, the industry standard in determining whether our Kubernetes cluster is secure.

For the purposes of this lab, we’ll assume this isn’t the first time you’ve provisioned a two-node kubeadm cluster, so we’ll skip over the details and provide the commands directly to get our cluster set up in minutes.

The versions of Kubernetes and associated components used in this lab are as follows:

- Kubernetes 1.28.0

- containerd 1.7.3

- runc 1.1.9

- CNI plugins 1.3.0

- Calico 3.26.1

Setting up master0

Run the following commands on master0 to perform preliminary setup and avoid issues on installing and initializing Kubernetes. Make sure to replace x.x.x.x below with the private IP address of master0.

sudo hostnamectl set-hostname master0

echo "export PATH=\"/opt/cni/bin:/usr/local/sbin:/usr/local/bin:\$PATH\"" >> "$HOME/.bashrc" && \

source "$HOME/.bashrc"

sudo sed -i 's#Defaults secure_path = /sbin:/bin:/usr/sbin:/usr/bin#Defaults secure_path = /opt/cni/bin:/usr/local/sbin:/usr/local/bin:/sbin:/bin:/usr/sbin:/usr/bin#' /etc/sudoers

export K8S_CONTROL_PLANE="x.x.x.x"

echo "$K8S_CONTROL_PLANE k8s-control-plane" | sudo tee -a /etc/hosts

sudo modprobe br_netfilter

echo br_netfilter | sudo tee /etc/modules-load.d/kubernetes.conf

cat << EOF | sudo tee -a /etc/sysctl.conf

net.ipv4.ip_forward=1

EOF

sudo sysctl -p

sudo systemctl reboot

After the reboot, run the following commands to install the containerd CRI and associated components:

wget https://github.com/containerd/containerd/releases/download/v1.7.3/containerd-1.7.3-linux-amd64.tar.gz

sudo tar Cxzvf /usr/local containerd-1.7.3-linux-amd64.tar.gz

sudo mkdir -p /usr/local/lib/systemd/system/

sudo wget -qO /usr/local/lib/systemd/system/containerd.service https://raw.githubusercontent.com/containerd/containerd/main/containerd.service

sudo systemctl daemon-reload

sudo systemctl enable --now containerd.service

sudo mkdir -p /etc/containerd/

containerd config default | \

sed 's/SystemdCgroup = false/SystemdCgroup = true/' | \

sed 's/pause:3.8/pause:3.9/' | \

sudo tee /etc/containerd/config.toml

sudo systemctl restart containerd.service

sudo mkdir -p /usr/local/sbin/

sudo wget -qO /usr/local/sbin/runc https://github.com/opencontainers/runc/releases/download/v1.1.9/runc.amd64

sudo chmod +x /usr/local/sbin/runc

sudo mkdir -p /opt/cni/bin/

wget https://github.com/containernetworking/plugins/releases/download/v1.3.0/cni-plugins-linux-amd64-v1.3.0.tgz

sudo tar Cxzvf /opt/cni/bin cni-plugins-linux-amd64-v1.3.0.tgz

Now run the commands below to install Kubernetes and initialize our master node:

sudo apt update && sudo apt install -y apt-transport-https ca-certificates curl

curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.28/deb/Release.key | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg

echo 'deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.28/deb/ /' | sudo tee /etc/apt/sources.list.d/kubernetes.list

sudo apt update && sudo apt install -y \
  kubeadm=1.28.0-1.1 \
  kubelet=1.28.0-1.1 \
  kubectl=1.28.0-1.1

sudo apt-mark hold kubelet kubeadm kubectl

sudo systemctl enable --now kubelet.service

cat > kubeadm-config.yaml << EOF

kind: ClusterConfiguration

apiVersion: kubeadm.k8s.io/v1beta3

kubernetesVersion: v1.28.0

controlPlaneEndpoint: "k8s-control-plane:6443"

networking:

  podSubnet: "192.168.0.0/16"

---

kind: KubeletConfiguration

apiVersion: kubelet.config.k8s.io/v1beta1

cgroupDriver: systemd

EOF

sudo kubeadm init --config kubeadm-config.yaml

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

echo "source <(kubectl completion bash)" >> "$HOME/.bashrc" && \

source "$HOME/.bashrc"

wget -qO - https://raw.githubusercontent.com/projectcalico/calico/v3.26.1/manifests/calico.yaml | \

kubectl apply -f -

Wait a minute or two, then run the command below to verify that our master0 is Ready:

kubectl get no

Sample output:

NAME STATUS ROLES AGE VERSION

master0 Ready control-plane 72s v1.28.0
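Rather than eyeballing the table, the readiness check can be scripted. Below is a minimal sketch, assuming the column layout shown in the sample output (node name first, STATUS second); not_ready_nodes is a hypothetical helper name and not part of kubectl:

```shell
# not_ready_nodes: read `kubectl get no --no-headers` style output on stdin
# and print the name of every node whose STATUS column is not exactly "Ready".
# ASSUMPTION: column 1 is the node name and column 2 is the status.
not_ready_nodes() {
  awk '$2 != "Ready" { print $1 }'
}
```

For example, kubectl get no --no-headers | not_ready_nodes should print nothing once every node is Ready. Note that a cordoned node reports Ready,SchedulingDisabled and would be listed by this strict comparison.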

Setting up worker0

Now set up our worker node worker0.

Again, start with the preliminary setup, which also reboots our node. Replace x.x.x.x with the private IP address of master0:

sudo hostnamectl set-hostname worker0

echo "export PATH=\"/opt/cni/bin:/usr/local/sbin:/usr/local/bin:\$PATH\"" >> "$HOME/.bashrc" && \

source "$HOME/.bashrc"

sudo sed -i 's#Defaults secure_path = /sbin:/bin:/usr/sbin:/usr/bin#Defaults secure_path = /opt/cni/bin:/usr/local/sbin:/usr/local/bin:/sbin:/bin:/usr/sbin:/usr/bin#' /etc/sudoers

export K8S_CONTROL_PLANE="x.x.x.x"

echo "$K8S_CONTROL_PLANE k8s-control-plane" | sudo tee -a /etc/hosts

sudo modprobe br_netfilter

echo br_netfilter | sudo tee /etc/modules-load.d/kubernetes.conf

cat << EOF | sudo tee -a /etc/sysctl.conf

net.ipv4.ip_forward=1

EOF

sudo sysctl -p

sudo systemctl reboot

Next, install containerd and associated components:

wget https://github.com/containerd/containerd/releases/download/v1.7.3/containerd-1.7.3-linux-amd64.tar.gz

sudo tar Cxzvf /usr/local containerd-1.7.3-linux-amd64.tar.gz

sudo mkdir -p /usr/local/lib/systemd/system/

sudo wget -qO /usr/local/lib/systemd/system/containerd.service https://raw.githubusercontent.com/containerd/containerd/main/containerd.service

sudo systemctl daemon-reload

sudo systemctl enable --now containerd.service

sudo mkdir -p /etc/containerd/

containerd config default | \

sed 's/SystemdCgroup = false/SystemdCgroup = true/' | \

sed 's/pause:3.8/pause:3.9/' | \

sudo tee /etc/containerd/config.toml

sudo systemctl restart containerd.service

sudo mkdir -p /usr/local/sbin/

sudo wget -qO /usr/local/sbin/runc https://github.com/opencontainers/runc/releases/download/v1.1.9/runc.amd64

sudo chmod +x /usr/local/sbin/runc

sudo mkdir -p /opt/cni/bin/

wget https://github.com/containernetworking/plugins/releases/download/v1.3.0/cni-plugins-linux-amd64-v1.3.0.tgz

sudo tar Cxzvf /opt/cni/bin cni-plugins-linux-amd64-v1.3.0.tgz

Now, install Kubernetes and initialize our worker node - replace the x’s with your Kubernetes token and CA certificate hash as shown in the output of kubeadm init on our master node master0:

sudo apt update && sudo apt install -y apt-transport-https ca-certificates curl

curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.28/deb/Release.key | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg

echo 'deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.28/deb/ /' | sudo tee /etc/apt/sources.list.d/kubernetes.list

sudo apt update && sudo apt install -y \
  kubeadm=1.28.0-1.1 \
  kubelet=1.28.0-1.1

sudo apt-mark hold kubelet kubeadm

sudo systemctl enable --now kubelet.service

export K8S_TOKEN="xxxxxx.xxxxxxxxxxxxxxxx"

export K8S_CA_CERT_HASH="xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx"

sudo kubeadm join k8s-control-plane:6443 \
  --discovery-token "${K8S_TOKEN}" \
  --discovery-token-ca-cert-hash "sha256:${K8S_CA_CERT_HASH}"

Again, wait a minute or two after the setup is complete and run the command again to verify that our worker0 node is also Ready:

kubectl get no

Sample output:

NAME STATUS ROLES AGE VERSION

master0 Ready control-plane 10m v1.28.0

worker0 Ready <none> 21s v1.28.0

Verify the basic functionality of our cluster

Let’s test that our cluster can run basic workloads and that pod networking is functioning as expected.

Run the following commands on master0.

First untaint our master node so we can run application pods on it as well:

kubectl taint no master0 node-role.kubernetes.io/control-plane-

Now create an NGINX deployment with two replicas and expose it with a ClusterIP service:

kubectl create deploy nginx --image=nginx --replicas=2 --port=80

kubectl expose deploy nginx

Spin up a Pod with curl pre-installed so we can curl our nginx service:

kubectl run curlpod --image=curlimages/curl -- sleep infinity

Now curl our nginx service - we should expect to receive a response:

kubectl exec curlpod -- curl -s nginx

Sample output:

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

This confirms that our cluster is functional.

Running the kube-bench tests and analyzing the log output

Now fetch the job.yaml file from kube-bench which runs the benchmarking tool as a Kubernetes Job:

wget https://raw.githubusercontent.com/aquasecurity/kube-bench/main/job.yaml

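Before applying this YAML file, let’s add a nodeName field to the Pod template spec (spec.template.spec.nodeName) to schedule it to our master0 node. The line is appended to the end of the file, so its indentation of six spaces must match the other Pod spec fields:

echo '      nodeName: master0' >> job.yaml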
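Because the echo above simply appends to the file, running it twice leaves a duplicate line behind. Here is a minimal sketch of a re-runnable alternative, assuming the Pod spec fields in job.yaml are indented by six spaces and that the file ends inside the Pod spec with a trailing newline; pin_job_to_node is a hypothetical helper name:

```shell
# pin_job_to_node FILE NODE
# Set the nodeName field at the end of FILE, replacing any earlier value.
pin_job_to_node() {
  file="$1"
  node="$2"
  tmp="$(mktemp)"
  # Drop any nodeName line left over from a previous run. grep exits 1 when
  # it prints nothing, hence the "|| true".
  grep -v '^ *nodeName:' "$file" > "$tmp" || true
  # Write back in place so the original file's permissions are kept.
  cat "$tmp" > "$file"
  rm -f "$tmp"
  # ASSUMPTION: six spaces matches the indentation of the Pod spec fields.
  printf '      nodeName: %s\n' "$node" >> "$file"
}
```

Calling pin_job_to_node job.yaml master0 and later pin_job_to_node job.yaml worker0 should leave exactly one nodeName line in the file each time.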

Apply the YAML file to create the job:

kubectl apply -f job.yaml

Wait about 10-15 seconds for the job to complete:

kubectl get job

Once the job completes, fetch the logs for the corresponding kube-bench-xxxxx pod. Replace the xxxxx placeholder as appropriate and remember - kubectl tab completion is your friend ;-)

kubectl logs kube-bench-xxxxx

Take some time to scroll through and comprehend the output. For the purposes of this lab though, we’ll ignore the numerous warnings emitted by kube-bench and focus on just resolving the failed tests, which can be shown succinctly with the command below - again, replace the xxxxx placeholder as appropriate:

kubectl logs kube-bench-xxxxx | grep FAIL

Sample output:

[FAIL] 1.1.12 Ensure that the etcd data directory ownership is set to etcd:etcd (Automated)

[FAIL] 1.2.5 Ensure that the --kubelet-certificate-authority argument is set as appropriate (Automated)

[FAIL] 1.2.17 Ensure that the --profiling argument is set to false (Automated)

[FAIL] 1.2.18 Ensure that the --audit-log-path argument is set (Automated)

[FAIL] 1.2.19 Ensure that the --audit-log-maxage argument is set to 30 or as appropriate (Automated)

[FAIL] 1.2.20 Ensure that the --audit-log-maxbackup argument is set to 10 or as appropriate (Automated)

[FAIL] 1.2.21 Ensure that the --audit-log-maxsize argument is set to 100 or as appropriate (Automated)

[FAIL] 1.3.2 Ensure that the --profiling argument is set to false (Automated)

[FAIL] 1.4.1 Ensure that the --profiling argument is set to false (Automated)

9 checks FAIL

0 checks FAIL

0 checks FAIL

[FAIL] 4.1.1 Ensure that the kubelet service file permissions are set to 600 or more restrictive (Automated)

1 checks FAIL

0 checks FAIL

10 checks FAIL
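Note that grep FAIL also matches the per-section summary lines such as "9 checks FAIL". If we only want the IDs of the failing checks, a small filter helps. This is a minimal sketch assuming the line format shown in the sample output above; failed_check_ids is a hypothetical helper name:

```shell
# failed_check_ids: read kube-bench log output on stdin and print the ID of
# every check whose result tag is [FAIL], skipping the "N checks FAIL" totals.
# ASSUMPTION: result lines look like "[FAIL] <id> <description>".
failed_check_ids() {
  awk '$1 == "[FAIL]" { print $2 }'
}
```

It can be used as kubectl logs kube-bench-xxxxx | failed_check_ids, and piping the result through wc -l gives the number of failing checks.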

Here’s what each failing test means:

- “Ensure that the etcd data directory ownership is set to etcd:etcd” - the etcd data directory at /var/lib/etcd/ and its contents should be owned by the etcd system account, which should be created if it does not exist

- “Ensure that the --kubelet-certificate-authority argument is set as appropriate” - by default, the kubelet server certificate is self-signed and the kube-apiserver does not verify the authenticity of the certificate when communicating with the kubelet. This implies that the API server to kubelet connection is insecure and cannot be safely run over a public network. By replacing the kubelet’s server certificate with one signed by a trusted CA (as specified in the API server’s --kubelet-certificate-authority argument), the communication is secured and can then be safely run over a public network - refer to the official documentation for details

- “Ensure that the --profiling argument is set to false” - the profiling feature of the kube-apiserver, kube-controller-manager and kube-scheduler exposes sensitive information about the cluster which could be exploited by a malicious actor. Setting it to false remediates this issue

- “Ensure that the --audit-log-path argument is set” - audit logging for the kube-apiserver is off by default. Turning it on and specifying an appropriate path such as /var/log/apiserver/audit.log allows an administrator to periodically review the log for potential indicators of compromise

- “Ensure that the --audit-log-maxage argument is set to 30 or as appropriate” - the maximum number of days to retain old audit log files

- “Ensure that the --audit-log-maxbackup argument is set to 10 or as appropriate” - the maximum number of old audit log files to retain

- “Ensure that the --audit-log-maxsize argument is set to 100 or as appropriate” - the maximum size in megabytes of an audit log file before it is rotated

- “Ensure that the kubelet service file permissions are set to 600 or more restrictive” - the kubelet service file located at /lib/systemd/system/kubelet.service on Ubuntu has its permissions set to 0644 by default, which is insecure. This can be easily remediated by manually setting it to 0600
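The file permission check above can also be reproduced by hand on a node. The following is a minimal sketch of the "600 or more restrictive" rule, not kube-bench’s own implementation; is_600_or_stricter is a hypothetical helper name:

```shell
# is_600_or_stricter MODE
# Succeed when the octal MODE (e.g. 644) grants nothing beyond owner
# read/write, i.e. no bits are set outside 0600.
is_600_or_stricter() {
  mode="$1"
  # The leading 0 makes the shell read MODE as octal. Clearing the 0600 bits
  # must leave nothing behind for the mode to count as restrictive enough.
  [ $(( 0$mode & ~0600 & 07777 )) -eq 0 ]
}
```

On Ubuntu, where GNU stat is available, it can be combined with stat -c '%a' /lib/systemd/system/kubelet.service to test the actual file, for example is_600_or_stricter "$(stat -c '%a' /lib/systemd/system/kubelet.service)" && echo OK.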

Now let’s run the same test against our worker node. Perhaps counter-intuitively, these commands are run on the master0 node:

sed -i 's/master0/worker0/' job.yaml

kubectl replace --force -f job.yaml

Wait 10-15 seconds for it to finish, then grep our pod logs again for failures:

kubectl logs kube-bench-xxxxx | grep FAIL

We’ll see that our worker node only fails the kubelet.service test:

[FAIL] 4.1.1 Ensure that the kubelet service file permissions are set to 600 or more restrictive (Automated)

1 checks FAIL

0 checks FAIL

1 checks FAIL

Remediation overview

Except for the failures related to file and directory permissions, which can be remediated directly, the rest should be configured through the kubeadm-config.yaml file passed to kubeadm on the master node for cluster initialization. Here’s what our updated kubeadm-config.yaml file looks like:

kind: ClusterConfiguration

apiVersion: kubeadm.k8s.io/v1beta3

kubernetesVersion: v1.28.0

controlPlaneEndpoint: "k8s-control-plane:6443"

networking:

  podSubnet: "192.168.0.0/16"

apiServer:

  extraArgs:

    profiling: "false"

    audit-log-path: /var/log/apiserver/audit.log

    audit-log-maxage: "30"

    audit-log-maxbackup: "10"

    audit-log-maxsize: "100"

    kubelet-certificate-authority: /etc/kubernetes/pki/ca.crt

controllerManager:

  extraArgs:

    profiling: "false"

scheduler:

  extraArgs:

    profiling: "false"

---

kind: KubeletConfiguration

apiVersion: kubelet.config.k8s.io/v1beta1

cgroupDriver: systemd

serverTLSBootstrap: true

Apart from the usual fields, notice the following new fields:

- ClusterConfiguration.apiServer.extraArgs - here is where we pass our extra command-line arguments to the kube-apiserver. The keys are the flag names without the leading double dash -- and the values are the option values

- ClusterConfiguration.controllerManager.extraArgs - ditto but for kube-controller-manager

- ClusterConfiguration.scheduler.extraArgs - ditto but for kube-scheduler

- KubeletConfiguration.serverTLSBootstrap: true - this instructs each kubelet to request its server certificate from the Kubernetes cluster CA through a certificate signing request (CSR) instead of using the default of generating a self-signed certificate. These CSRs must be approved before the certificates are issued. In conjunction with the --kubelet-certificate-authority=/etc/kubernetes/pki/ca.crt argument passed to the kube-apiserver, this secures communication between the API server and kubelet so the connection could in theory be served securely over the public Internet

Refer to the official documentation for details regarding the kubeadm-config.yaml configuration file.
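Once the cluster is initialized, kubeadm renders these extraArgs into the static Pod manifests under /etc/kubernetes/manifests/, so we can confirm that a flag actually landed there. Below is a minimal sketch, assuming each flag appears as its own "- --name=value" list item in the manifest; missing_flags is a hypothetical helper name:

```shell
# missing_flags FLAG...
# Read a static Pod manifest on stdin and print every FLAG that is not
# present as an exact "- FLAG" list item.
# ASSUMPTION: each argument sits on its own line in the form "- --name=value".
missing_flags() {
  manifest="$(cat)"
  for flag in "$@"; do
    printf '%s\n' "$manifest" |
      awk -v f="$flag" '$1 == "-" && $2 == f { found = 1 } END { exit !found }' ||
      printf '%s\n' "$flag"
  done
}
```

For example, sudo cat /etc/kubernetes/manifests/kube-apiserver.yaml | missing_flags --profiling=false --audit-log-maxage=30 should print nothing if both flags were applied.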

Provisioning a secure two-node kubeadm cluster

Armed with this knowledge, let’s delete our cluster and re-create it with security hardening in mind.

Setting up master0

Preliminary setup (replace x.x.x.x with the private IP address of master0):

sudo hostnamectl set-hostname master0

echo "export PATH=\"/opt/cni/bin:/usr/local/sbin:/usr/local/bin:\$PATH\"" >> "$HOME/.bashrc" && \

source "$HOME/.bashrc"

sudo sed -i 's#Defaults secure_path = /sbin:/bin:/usr/sbin:/usr/bin#Defaults secure_path = /opt/cni/bin:/usr/local/sbin:/usr/local/bin:/sbin:/bin:/usr/sbin:/usr/bin#' /etc/sudoers

export K8S_CONTROL_PLANE="x.x.x.x"

echo "$K8S_CONTROL_PLANE k8s-control-plane" | sudo tee -a /etc/hosts

sudo modprobe br_netfilter

echo br_netfilter | sudo tee /etc/modules-load.d/kubernetes.conf

cat << EOF | sudo tee -a /etc/sysctl.conf

net.ipv4.ip_forward=1

EOF

sudo sysctl -p

sudo systemctl reboot

Installing containerd:

wget https://github.com/containerd/containerd/releases/download/v1.7.3/containerd-1.7.3-linux-amd64.tar.gz

sudo tar Cxzvf /usr/local containerd-1.7.3-linux-amd64.tar.gz

sudo mkdir -p /usr/local/lib/systemd/system/

sudo wget -qO /usr/local/lib/systemd/system/containerd.service https://raw.githubusercontent.com/containerd/containerd/main/containerd.service

sudo systemctl daemon-reload

sudo systemctl enable --now containerd.service

sudo mkdir -p /etc/containerd/

containerd config default | \

sed 's/SystemdCgroup = false/SystemdCgroup = true/' | \

sed 's/pause:3.8/pause:3.9/' | \

sudo tee /etc/containerd/config.toml

sudo systemctl restart containerd.service

sudo mkdir -p /usr/local/sbin/

sudo wget -qO /usr/local/sbin/runc https://github.com/opencontainers/runc/releases/download/v1.1.9/runc.amd64

sudo chmod +x /usr/local/sbin/runc

sudo mkdir -p /opt/cni/bin/

wget https://github.com/containernetworking/plugins/releases/download/v1.3.0/cni-plugins-linux-amd64-v1.3.0.tgz

sudo tar Cxzvf /opt/cni/bin cni-plugins-linux-amd64-v1.3.0.tgz

Installing Kubernetes and initializing the control plane:

sudo apt update && sudo apt install -y apt-transport-https ca-certificates curl

curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.28/deb/Release.key | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg

echo 'deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.28/deb/ /' | sudo tee /etc/apt/sources.list.d/kubernetes.list

sudo apt update && sudo apt install -y \
  kubeadm=1.28.0-1.1 \
  kubelet=1.28.0-1.1 \
  kubectl=1.28.0-1.1

sudo apt-mark hold kubelet kubeadm kubectl

sudo chmod 600 /lib/systemd/system/kubelet.service

sudo useradd -r -c "etcd user" -s /sbin/nologin -M etcd -U

sudo systemctl enable --now kubelet.service

cat > kubeadm-config.yaml << EOF

kind: ClusterConfiguration

apiVersion: kubeadm.k8s.io/v1beta3

kubernetesVersion: v1.28.0

controlPlaneEndpoint: "k8s-control-plane:6443"

networking:

  podSubnet: "192.168.0.0/16"

apiServer:

  extraArgs:

    profiling: "false"

    audit-log-path: /var/log/apiserver/audit.log

    audit-log-maxage: "30"

    audit-log-maxbackup: "10"

    audit-log-maxsize: "100"

    kubelet-certificate-authority: /etc/kubernetes/pki/ca.crt

controllerManager:

  extraArgs:

    profiling: "false"

scheduler:

  extraArgs:

    profiling: "false"

---

kind: KubeletConfiguration

apiVersion: kubelet.config.k8s.io/v1beta1

cgroupDriver: systemd

serverTLSBootstrap: true

EOF

sudo kubeadm init --config kubeadm-config.yaml

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

echo "source <(kubectl completion bash)" >> "$HOME/.bashrc" && \

source "$HOME/.bashrc"

sudo chmod 700 /var/lib/etcd

sudo chown -R etcd: /var/lib/etcd

wget -qO - https://raw.githubusercontent.com/projectcalico/calico/v3.26.1/manifests/calico.yaml | \

kubectl apply -f -

Verify the master is ready with kubectl get no.

Setting up worker0

Preliminary setup (replace x.x.x.x with the private IP for master0):

sudo hostnamectl set-hostname worker0

echo "export PATH=\"/opt/cni/bin:/usr/local/sbin:/usr/local/bin:\$PATH\"" >> "$HOME/.bashrc" && \

source "$HOME/.bashrc"

sudo sed -i 's#Defaults secure_path = /sbin:/bin:/usr/sbin:/usr/bin#Defaults secure_path = /opt/cni/bin:/usr/local/sbin:/usr/local/bin:/sbin:/bin:/usr/sbin:/usr/bin#' /etc/sudoers

export K8S_CONTROL_PLANE="x.x.x.x"

echo "$K8S_CONTROL_PLANE k8s-control-plane" | sudo tee -a /etc/hosts

sudo modprobe br_netfilter

echo br_netfilter | sudo tee /etc/modules-load.d/kubernetes.conf

cat << EOF | sudo tee -a /etc/sysctl.conf

net.ipv4.ip_forward=1

EOF

sudo sysctl -p

sudo systemctl reboot

Installing containerd:

wget https://github.com/containerd/containerd/releases/download/v1.7.3/containerd-1.7.3-linux-amd64.tar.gz

sudo tar Cxzvf /usr/local containerd-1.7.3-linux-amd64.tar.gz

sudo mkdir -p /usr/local/lib/systemd/system/

sudo wget -qO /usr/local/lib/systemd/system/containerd.service https://raw.githubusercontent.com/containerd/containerd/main/containerd.service

sudo systemctl daemon-reload

sudo systemctl enable --now containerd.service

sudo mkdir -p /etc/containerd/

containerd config default | \

sed 's/SystemdCgroup = false/SystemdCgroup = true/' | \

sed 's/pause:3.8/pause:3.9/' | \

sudo tee /etc/containerd/config.toml

sudo systemctl restart containerd.service

sudo mkdir -p /usr/local/sbin/

sudo wget -qO /usr/local/sbin/runc https://github.com/opencontainers/runc/releases/download/v1.1.9/runc.amd64

sudo chmod +x /usr/local/sbin/runc

sudo mkdir -p /opt/cni/bin/

wget https://github.com/containernetworking/plugins/releases/download/v1.3.0/cni-plugins-linux-amd64-v1.3.0.tgz

sudo tar Cxzvf /opt/cni/bin cni-plugins-linux-amd64-v1.3.0.tgz

Installing Kubernetes and initializing the worker node (replace the placeholders marked with x’s with the corresponding Kubernetes token and CA certificate hash):

sudo apt update && sudo apt install -y apt-transport-https ca-certificates curl

curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.28/deb/Release.key | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg

echo 'deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.28/deb/ /' | sudo tee /etc/apt/sources.list.d/kubernetes.list

sudo apt update && sudo apt install -y \
  kubeadm=1.28.0-1.1 \
  kubelet=1.28.0-1.1

sudo apt-mark hold kubelet kubeadm

sudo chmod 600 /lib/systemd/system/kubelet.service

sudo systemctl enable --now kubelet.service

export K8S_TOKEN="xxxxxx.xxxxxxxxxxxxxxxx"

export K8S_CA_CERT_HASH="xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx"

sudo kubeadm join k8s-control-plane:6443 \
  --discovery-token "${K8S_TOKEN}" \
  --discovery-token-ca-cert-hash "sha256:${K8S_CA_CERT_HASH}"

Verify that the worker node is ready with kubectl get no.

Verify the basic functionality of our cluster

Before verifying this hardened cluster, we need to approve the certificate signing requests (CSRs) generated for the kubelet server certificates. This completes the configuration for secured communication from the API server to the kubelets, so that our API server can properly manage Pods through the kubelet. Once the CSRs are approved, follow the same steps as with the original cluster above, or devise your own tests, to verify that the cluster is indeed functioning as expected. To approve the pending CSRs:

for csr in $(kubectl get csr | grep Pending | awk '{ print $1 }'); do

kubectl certificate approve $csr

done

Sample output:

certificatesigningrequest.certificates.k8s.io/csr-96t9n approved

certificatesigningrequest.certificates.k8s.io/csr-mhfft approved

certificatesigningrequest.certificates.k8s.io/csr-x5t2m approved
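The loop above approves every pending CSR, which is fine for a lab. As a slightly more cautious sketch, we could limit approval to requests for the kubernetes.io/kubelet-serving signer. This assumes the kubectl get csr column layout of NAME, AGE, SIGNERNAME, REQUESTOR, REQUESTEDDURATION and CONDITION; pending_kubelet_serving_csrs is a hypothetical helper name:

```shell
# pending_kubelet_serving_csrs: read `kubectl get csr --no-headers` output on
# stdin and print the names of Pending kubelet serving certificate requests.
# ASSUMPTION: column 3 is the signer name and the last column is the condition.
pending_kubelet_serving_csrs() {
  awk '$3 == "kubernetes.io/kubelet-serving" && $NF == "Pending" { print $1 }'
}
```

It slots into the same loop, for example for csr in $(kubectl get csr --no-headers | pending_kubelet_serving_csrs); do kubectl certificate approve "$csr"; done. In a production cluster you would also want to check the requestor of each CSR before approving it.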

Running the kube-bench tests to verify our cluster is now secure

Let’s download the job.yaml from kube-bench once more and assign it to run on the master0 node:

wget https://raw.githubusercontent.com/aquasecurity/kube-bench/main/job.yaml

echo '      nodeName: master0' >> job.yaml

kubectl apply -f job.yaml

Wait 10-15 seconds or poll with kubectl get job until complete. Now view the failed tests through the logs (replace the xxxxx placeholder as appropriate):

kubectl logs kube-bench-xxxxx | grep FAIL

Sample output:

[FAIL] 1.1.12 Ensure that the etcd data directory ownership is set to etcd:etcd (Automated)

1 checks FAIL

0 checks FAIL

0 checks FAIL

0 checks FAIL

0 checks FAIL

1 checks FAIL

Notice the test for etcd data directory ownership is still failing - we’ll get back to that in a moment. But all the other tests have passed!

Now for our worker node - run these on the master node:

sed -i 's/master0/worker0/' job.yaml

kubectl replace --force -f job.yaml

Wait 10-15 seconds or poll with kubectl get job until complete, then grep the failing tests again:

kubectl logs kube-bench-xxxxx | grep FAIL

You should see no failures at all:

0 checks FAIL

0 checks FAIL

0 checks FAIL

Regarding the failing etcd data directory ownership check

If you check the /var/lib/etcd/ directory and its contents, you’ll see they’re already owned by etcd:etcd:

ls -l /var/lib/ | grep etcd

Sample output:

drwx------ 3 etcd etcd 4096 Aug 25 15:06 etcd

The test is erroneously failing since kube-bench is run inside a Pod which does not share the host PID namespace. This is a known issue and not yet resolved at the time of writing - see aquasecurity/kube-bench#1275 on GitHub for details.

Concluding remarks and going further

We’ve seen how to run CIS Kubernetes benchmark tests on an existing two-node kubeadm cluster with kube-bench, how to interpret the failing tests and how to address them by augmenting the kubeadm configuration with the appropriate fields and parameters. With the help of kube-bench, we can easily discover and remediate security-related issues at the cluster level which serves as a basis for securing our Kubernetes applications and workloads running atop.

I hope you enjoyed this article and stay tuned for more content ;-)
